Abstract

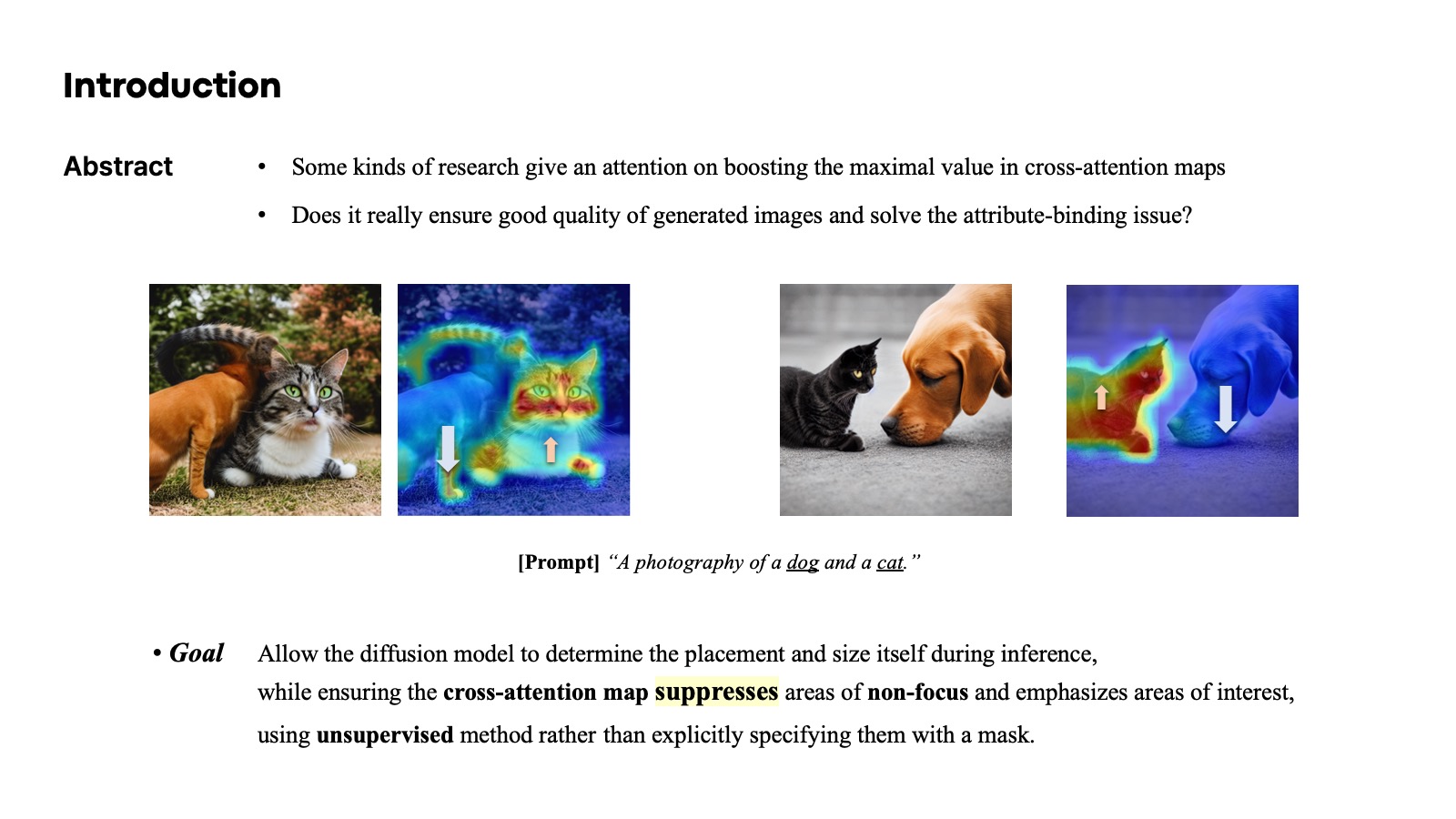

I am focusing on harnessing the power of diffusion models for conditional image generation. Essentially, I am aiming to guide the cross-attention to focus only on what’s vital by suppressing the less relevant parts in the cross attention map. To break it down, conditional image generation is all about creating images that align with the semantic or structural information of a given prompt, whether that be text, another image, or any form of guidance.

Currently, there’s a wealth of methodologies proposed that leverage stable diffusion to generate images as per user intent. Many of these techniques involve manipulating the feature map of the U-net architecture, the self-attention map, and especially the cross-attention map. Notably, many studies aim to maximize the cross-attention map in regions relevant to tokens from a text prompt. While this approach is essential, what caught my attention is ensuring that the parts in the cross-attention map that should be zero are definitively zeroed out.

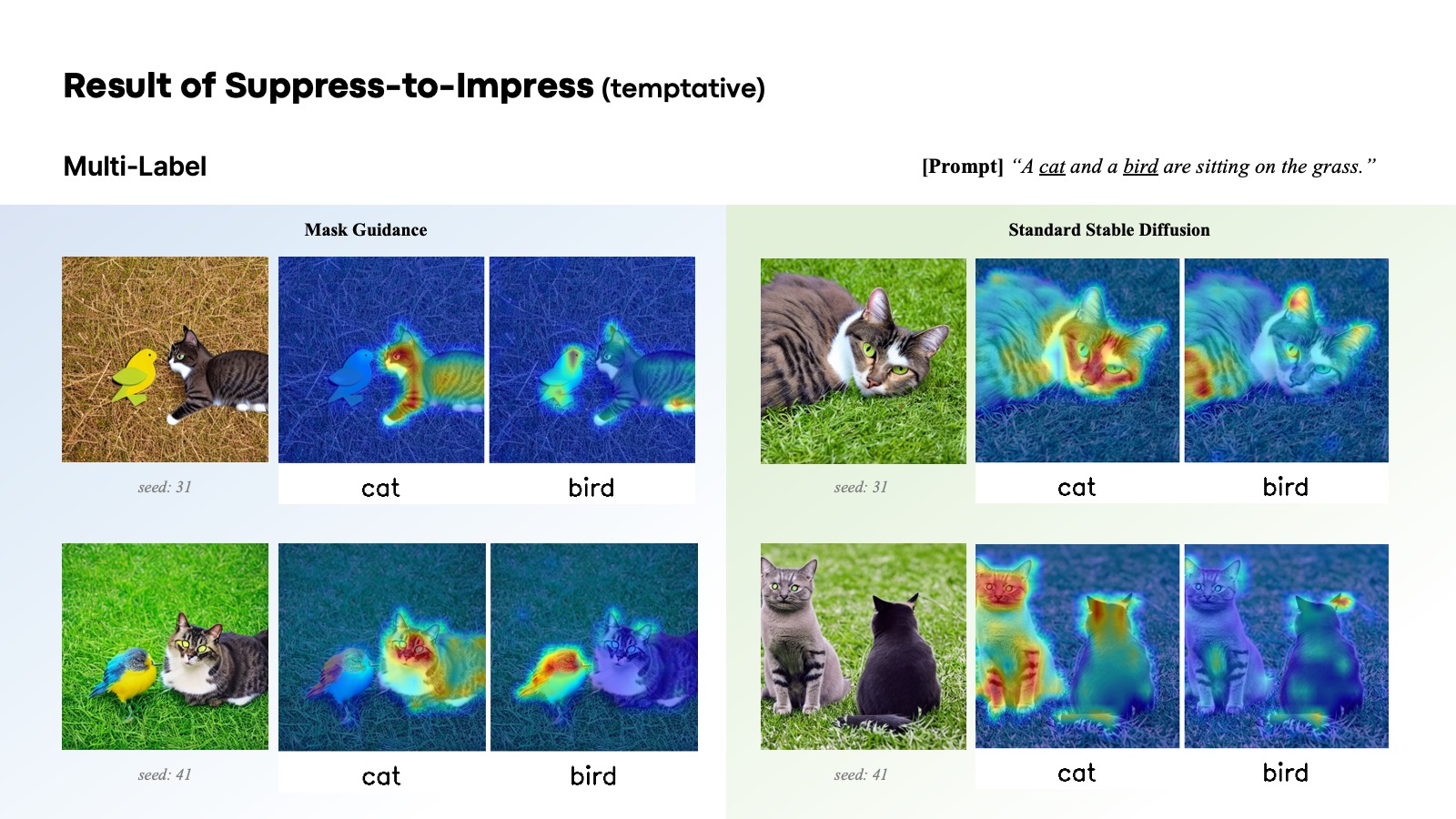

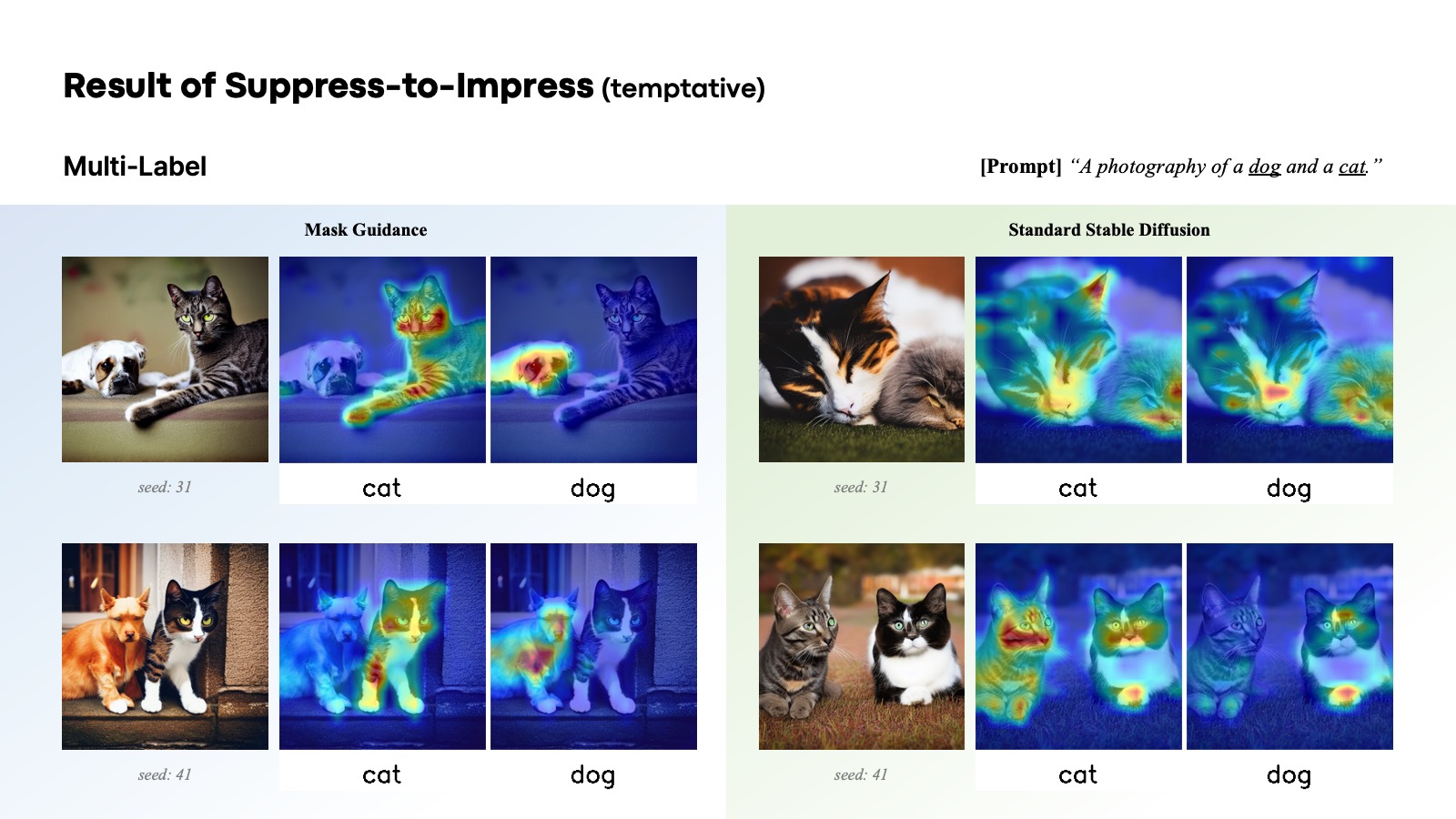

We introduce a new approach for addressing catastrophic neglect and attribute binding issues typically occurring in conditional image generation by Stable Diffusion. Contrast to other methods such as ‘Attend-and-Excite’, we are giving attention on attenuating the values of neglectable areas on the cross attention maps for the subject token. I believe that this could be the most important point on improvement in multi-labeled objects generation.

Goal

This approach aims to allow the diffusion model to determine the placement and size itself during inference, while ensuring the cross-attention map suppresses areas of non-focus and emphasizes areas of interest, using unsupervised method rather than explicitly specifying them with a mask.

Hypothesis

- The better the even distribution of activation values in the attention map across the target object to be generated, the higher the quality of the generated output.

- Addressing catastrophic neglect and attribute binding issues might involve ensuring exclusive activations in cross-attention maps for different subject tokens, minimizing overlap.

Method

TBD. I will present the methods once the project is completed.

Result

TBD. I will show experimental results from both a quantitative and qualitative perspective.

Miscellaneous

TBD. The ultimate goal of this project is to autonomously generate multi-labeled objects in high quality with unsupervised manner. To achieve this, I am delving into relative papers that puts forth methods for unsupervised semantic learning.